Accompanying material for the DAFx21 paper “Improving Synthesizer Programming from Variational Autoencoders Latent Space”

Authors: G. Le Vaillant, T. Dutoit, S. Dekeyser

Automatic synthesizer programming

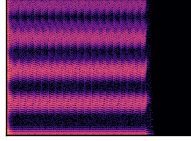

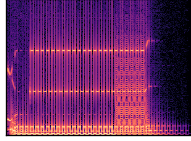

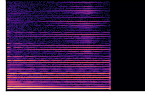

These first examples demonstrate how our model can be used to program a Dexed FM synthesizer (a Yamaha DX7 clone) to reproduce a given audio input. Input sounds were generated using original presets from the held-out test set of our 30k-preset dataset. Each preset contains 144 learnable parameters.

| Original preset | | Inferred preset *, learnable parameter representation: Num only | Inferred preset *: NumCat | Inferred preset *: NumCat++ |
|---|---|---|---|---|
| "Rhodes 3" (harmonic) | | | | |
| "NeutrnPluk" (harmonic) | | | | |
| "Vio Solo 1" (harmonic) | | | | |
| "Bass.Synth" (harmonic) | | | | |
| "Japanany" (percussive) | | | | |
| "CongaBongo" (percussive) | | | | |
| "R2.D2" (sfx) | | | | |
| "LazerGun" (sfx) | | | | |

* Some inferred presets were out of tune, so their pitch was manually adjusted to allow fair comparisons. The adjustment was made after preset inference with the Dexed ‘master tune’ control, which was set to 50% during training. This step could be automated by running a pitch estimation model such as CREPE [1] on the inferred audio samples.
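As a rough illustration of how the tuning step might be automated, the sketch below computes the pitch offset, in cents, between an inferred sound and its reference. It assumes that fundamental-frequency tracks (in Hz) have already been obtained from a pitch estimator such as CREPE; the function names and the example values are hypothetical, and the mapping from a cents offset to a ‘master tune’ setting is deliberately left out because it is not described here.

```python
import math
from statistics import median


def cents_offset(f0_inferred_hz, f0_reference_hz):
    """Signed pitch offset of the inferred sound relative to the reference, in cents."""
    return 1200.0 * math.log2(f0_inferred_hz / f0_reference_hz)


def median_cents_offset(inferred_track_hz, reference_track_hz):
    """Median frame-wise offset over two equally long f0 tracks.

    Frames where either track is unvoiced (f0 <= 0) are skipped.
    """
    offsets = [
        cents_offset(fi, fr)
        for fi, fr in zip(inferred_track_hz, reference_track_hz)
        if fi > 0 and fr > 0
    ]
    if not offsets:
        raise ValueError("no voiced frames in common")
    return median(offsets)


# Hypothetical f0 tracks (Hz); in practice these would come from a pitch estimator.
reference = [440.0, 440.0, 441.0, 0.0]
inferred = [452.9, 453.0, 453.1, 0.0]

offset = median_cents_offset(inferred, reference)
print(f"Inferred preset is {offset:+.1f} cents away from the reference")
```

A positive offset means the inferred preset sounds sharp and would need to be tuned down by roughly that amount.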

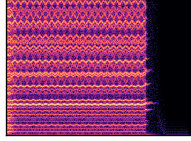

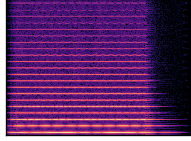

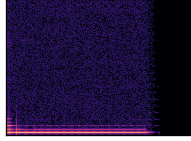

Learning presets from multiple notes

Most synthesizers provide parameters which modulate sound depending on the intensity and pitch of played notes. The paper introduces a convolutional structure for learning these parameters from multi-channel (multiple notes) spectrogram inputs.
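To make the idea of a multi-channel input concrete, the sketch below stacks one spectrogram per played note into a channels-first structure that a convolutional network could consume. This is only an assumed layout: the note list, the helper names and the placeholder spectrograms are hypothetical and are not taken from the paper's code.

```python
def stack_note_spectrograms(spectrograms, notes):
    """Return a channels-first [channel][frequency][time] nested list.

    `spectrograms` maps a (midi_pitch, velocity) note to a 2D spectrogram
    given as a list of rows. One channel is produced per note, in the
    order given by `notes`.
    """
    channels = [spectrograms[note] for note in notes]
    n_freq, n_time = len(channels[0]), len(channels[0][0])
    for note, spec in zip(notes, channels):
        if len(spec) != n_freq or any(len(row) != n_time for row in spec):
            raise ValueError(f"spectrogram for note {note} has a different shape")
    return channels


def fake_spectrogram(velocity, n_freq=4, n_time=5):
    """Placeholder spectrogram whose level simply scales with velocity."""
    return [[velocity / 127.0 for _ in range(n_time)] for _ in range(n_freq)]


# Hypothetical notes: same pitch (MIDI 60), three intensities.
notes = [(60, 20), (60, 64), (60, 127)]
spectrograms = {note: fake_spectrogram(note[1]) for note in notes}

multi_channel = stack_note_spectrograms(spectrograms, notes)
shape = (len(multi_channel), len(multi_channel[0]), len(multi_channel[0][0]))
print("channels x frequency bins x time frames:", shape)
```

A single-note model would receive only one of these channels, which is why it has less information about how the sound responds to note intensity.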

The example below shows:

- an original preset from the test set

- a preset inferred by a model trained on single-note input data

- a preset inferred by a model trained on multiple-note input data, which has better learned how the sound should be modulated depending on note intensity

| Note intensity: | 20/127 | 64/127 | 127/127 |
|---|---|---|---|
| Original preset | | | |
| Single-channel input model | | | |
| Multi-channel input model | | | |

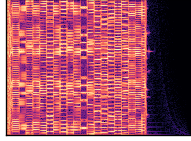

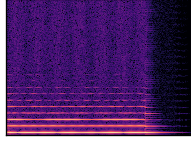

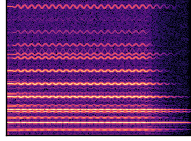

Interpolation between presets

The VAE-based architecture is a generative model that can infer new presets. Compared to previous work, our approach handles all synthesizer parameters simultaneously and reduces the error on their latent encodings.

The following examples compare interpolations between two presets from the held-out test dataset:

- The “latent space” interpolation consists of encoding presets into the latent space using the RealNVP [2] invertible regression flow in its inverse direction. A linear interpolation is then performed on the latent vectors, which are converted back into presets using the regression flow in its forward direction.

- The “naive” interpolation consists of a linear interpolation between VST parameters.
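The two interpolation schemes described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: the trained RealNVP regression flow is replaced by a toy invertible map (an element-wise sigmoid and its inverse, the logit), and the function names and the two-parameter presets are hypothetical. Only the overall procedure (invert, interpolate linearly, map forward) follows the description.

```python
import math


def lerp(a, b, t):
    """Element-wise linear interpolation between two vectors."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolate(start, end, n_steps=7):
    """n_steps vectors from start (step 1) to end (step n_steps), both included."""
    return [lerp(start, end, i / (n_steps - 1)) for i in range(n_steps)]


def flow_forward(z):
    """Toy stand-in for the flow's forward direction: latent -> parameters in (0, 1)."""
    return [1.0 / (1.0 + math.exp(-x)) for x in z]


def flow_inverse(v, eps=1e-6):
    """Toy stand-in for the flow's inverse direction: parameters -> latent."""
    clipped = [min(max(x, eps), 1.0 - eps) for x in v]
    return [math.log(x / (1.0 - x)) for x in clipped]


def naive_interpolation(v_start, v_end, n_steps=7):
    """Linear interpolation directly between parameter vectors."""
    return interpolate(v_start, v_end, n_steps)


def latent_interpolation(v_start, v_end, n_steps=7):
    """Invert both presets, interpolate latent vectors, then map back to parameters."""
    z_steps = interpolate(flow_inverse(v_start), flow_inverse(v_end), n_steps)
    return [flow_forward(z) for z in z_steps]


# Hypothetical two-parameter presets, normalized to [0, 1].
start_preset = [0.1, 0.9]
end_preset = [0.9, 0.5]

naive = naive_interpolation(start_preset, end_preset)
latent = latent_interpolation(start_preset, end_preset)
for step, (n, l) in enumerate(zip(naive, latent), start=1):
    print(f"Step {step}/7  naive={n}  latent={l}")
```

Both schemes share the same end points, but the intermediate presets differ as soon as the map between latent vectors and parameters is non-linear.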

Interpolation example 1

| Interpolation | Step 1/7 (start preset "NylonPick4") | Step 2/7 | Step 3/7 | Step 4/7 | Step 5/7 | Step 6/7 | Step 7/7 (end preset "FmRhodes14") |
|---|---|---|---|---|---|---|---|
| Latent space | | | | | | | |
| VST parameters (naive) | | | | | | | |

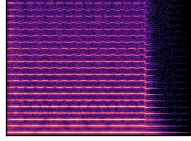

Interpolation example 2

| Interpolation | Step 1/7 (start preset "Dingle 1") | Step 2/7 | Step 3/7 | Step 4/7 | Step 5/7 | Step 6/7 | Step 7/7 (end preset "Get it") |
|---|---|---|---|---|---|---|---|
| Latent space | | | | | | | |
| VST parameters (naive) | | | | | | | |

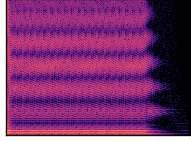

Out-of-domain samples

The following examples show how the model can program the Dexed synthesizer from sounds produced by acoustic instruments or by other synthesizers with different synthesis capabilities.

| Original sound | Inferred preset |
|---|---|
| | |
| | |
| | |
| | |

1. Jong Wook Kim, Justin Salamon, Peter Li, and Juan Pablo Bello, “CREPE: A Convolutional Representation for Pitch Estimation,” IEEE International Conference on Acoustics, Speech, and Signal Processing, 2018. https://arxiv.org/abs/1802.06182

2. Laurent Dinh, Jascha Sohl-Dickstein, and Samy Bengio, “Density estimation using Real NVP,” International Conference on Learning Representations, 2017. https://arxiv.org/abs/1605.08803